2. Preprocessing

Working in Google Colab

Load Packages

from PIL import Image

import re

import pandas as pd

import numpy as np

from tqdm import tqdm

from keras import layers, models, optimizers, losses, metrics

from keras.callbacks import ModelCheckpoint

from keras.applications import resnet50, inception_v3

import matplotlib.pyplot as plt

tqdm.pandas()

Load Datasets

total_df = pd.read_csv('/content/drive/Shared drives/personal/dataframe/total_df.csv')

poster_total = np.load('/content/drive/Shared drives/personal/poster/poster_total.npy')

total_df

| | code | title_kor | title_eng | year | rating | rank | link | genre |
|---|---|---|---|---|---|---|---|---|
| 0 | 171539 | 그린 북 | Green Book | 2018 | 9.59 | 12세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['드라마'] |
| 1 | 174830 | 가버나움 | Capharnaum | 2018 | 9.58 | 15세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['드라마'] |
| 2 | 151196 | 원더 | Wonder | 2017 | 9.49 | 전체 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['드라마'] |
| 3 | 169240 | 아일라 | Ayla: The Daughter of War | 2017 | 9.48 | 15세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['드라마', '전쟁'] |
| 4 | 157243 | 당갈 | Dangal | 2016 | 9.47 | 12세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['드라마', '액션'] |
| ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 1395 | 41334 | 옹박 - 두번째 미션 | The Protector | 2005 | 8.28 | 15세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['액션', '범죄', '드라마', '스릴러'] |
| 1396 | 162249 | 램페이지 | RAMPAGE | 2018 | 8.28 | 12세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['액션', '모험'] |
| 1397 | 82473 | 캐리비안의 해적: 죽은 자는 말이 없다 | Pirates of the Caribbean: Dead Men Tell No Tales | 2017 | 8.28 | 12세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['액션', '모험', '코미디', '판타지'] |
| 1398 | 31606 | 킬러들의 수다 | Guns & Talks | 2001 | 8.28 | 15세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['액션', '드라마', '코미디'] |
| 1399 | 51082 | 7급 공무원 | 7th Grade Civil Servant | 2009 | 8.28 | 12세 관람가 | https://movie.naver.com/movie/bi/mi/basic.nhn?... | ['액션', '코미디'] |

1400 rows × 8 columns

poster_total

array([[[[ 0, 100, 116],

[ 0, 100, 116],

[ 0, 100, 117],

...,

[ 1, 115, 141],

[ 0, 116, 141],

[ 1, 117, 142]],

[[ 0, 100, 116],

[ 0, 100, 115],

[ 0, 101, 118],

...,

[ 0, 115, 141],

[ 0, 116, 141],

[ 0, 116, 141]],

[[ 0, 101, 116],

[ 0, 101, 116],

[ 0, 101, 118],

...,

[ 1, 117, 142],

[ 1, 117, 142],

[ 1, 117, 142]],

...,

[[ 0, 106, 130],

[ 1, 107, 131],

[ 0, 108, 132],

...,

[ 0, 105, 127],

[ 0, 105, 127],

[ 0, 105, 127]],

[[ 0, 106, 130],

[ 0, 106, 130],

[ 1, 107, 131],

...,

[ 1, 104, 127],

[ 1, 104, 126],

[ 1, 104, 127]],

[[ 0, 106, 130],

[ 0, 106, 130],

[ 1, 107, 131],

...,

[ 1, 104, 127],

[ 1, 104, 127],

[ 2, 104, 127]]],

[[[227, 221, 218],

[231, 231, 227],

[237, 238, 239],

...,

[ 87, 56, 93],

[ 89, 58, 94],

[ 89, 57, 92]],

[[231, 229, 226],

[232, 231, 227],

[237, 237, 236],

...,

[ 89, 58, 95],

[ 88, 58, 93],

[ 89, 58, 94]],

[[236, 235, 233],

[236, 236, 235],

[237, 237, 236],

...,

[ 88, 58, 96],

[ 88, 58, 95],

[ 89, 58, 94]],

...,

[[104, 129, 151],

[105, 128, 150],

[106, 130, 152],

...,

[125, 125, 84],

[123, 124, 94],

[ 97, 104, 102]],

[[103, 129, 151],

[102, 128, 150],

[104, 129, 152],

...,

[115, 118, 87],

[111, 115, 91],

[ 87, 96, 99]],

[[100, 129, 149],

[100, 128, 149],

[103, 128, 150],

...,

[106, 112, 85],

[ 97, 106, 86],

[ 79, 85, 93]]],

[[[242, 225, 217],

[241, 223, 213],

[241, 222, 212],

...,

[250, 250, 231],

[253, 252, 248],

[253, 253, 252]],

[[252, 252, 252],

[255, 255, 255],

[255, 255, 255],

...,

[255, 255, 255],

[255, 255, 255],

[255, 255, 255]],

[[247, 234, 229],

[255, 255, 255],

[255, 255, 255],

...,

[255, 255, 255],

[255, 255, 255],

[255, 255, 255]],

...,

[[100, 97, 86],

[ 98, 95, 85],

[ 96, 95, 85],

...,

[114, 102, 86],

[111, 98, 79],

[114, 97, 77]],

[[100, 99, 90],

[ 89, 88, 79],

[ 76, 73, 65],

...,

[114, 100, 81],

[118, 102, 83],

[119, 100, 80]],

[[ 90, 89, 83],

[ 89, 86, 80],

[ 88, 83, 76],

...,

[102, 90, 73],

[104, 92, 76],

[103, 88, 74]]],

...,

[[[107, 147, 155],

[106, 146, 153],

[107, 146, 154],

...,

[214, 222, 204],

[214, 223, 206],

[213, 222, 204]],

[[106, 146, 153],

[106, 147, 154],

[105, 147, 153],

...,

[215, 222, 204],

[214, 222, 206],

[216, 223, 204]],

[[106, 146, 154],

[107, 147, 154],

[104, 146, 152],

...,

[215, 222, 204],

[215, 222, 205],

[210, 220, 204]],

...,

[[ 16, 24, 34],

[ 16, 23, 32],

[ 16, 23, 32],

...,

[ 16, 21, 28],

[ 16, 20, 29],

[ 16, 20, 29]],

[[ 18, 23, 34],

[ 17, 23, 34],

[ 17, 23, 32],

...,

[ 17, 21, 30],

[ 14, 20, 30],

[ 15, 20, 30]],

[[ 18, 22, 33],

[ 18, 23, 35],

[ 17, 22, 33],

...,

[ 20, 22, 30],

[ 45, 29, 30],

[ 28, 22, 29]]],

[[[237, 254, 248],

[250, 250, 250],

[255, 249, 253],

...,

[252, 250, 250],

[254, 250, 250],

[254, 252, 254]],

[[242, 254, 249],

[252, 251, 251],

[244, 243, 245],

...,

[236, 244, 239],

[253, 253, 253],

[254, 252, 255]],

[[248, 253, 251],

[255, 253, 254],

[226, 232, 232],

...,

[163, 172, 164],

[238, 239, 241],

[254, 253, 255]],

...,

[[252, 252, 252],

[255, 255, 255],

[188, 188, 188],

...,

[113, 113, 113],

[220, 220, 220],

[255, 255, 255]],

[[251, 251, 251],

[251, 251, 251],

[249, 249, 249],

...,

[152, 152, 152],

[225, 225, 225],

[255, 255, 255]],

[[252, 252, 252],

[253, 253, 253],

[254, 254, 254],

...,

[252, 252, 252],

[252, 252, 252],

[255, 255, 255]]],

[[[255, 255, 255],

[255, 255, 255],

[255, 255, 255],

...,

[179, 47, 35],

[179, 47, 35],

[179, 47, 35]],

[[255, 255, 255],

[255, 255, 255],

[255, 255, 255],

...,

[180, 48, 33],

[179, 47, 33],

[179, 47, 33]],

[[255, 255, 255],

[255, 255, 255],

[255, 255, 255],

...,

[181, 49, 32],

[181, 49, 35],

[181, 49, 35]],

...,

[[ 96, 4, 8],

[ 58, 2, 1],

[ 15, 1, 0],

...,

[136, 27, 33],

[138, 27, 33],

[138, 27, 33]],

[[ 82, 4, 4],

[ 32, 1, 2],

[ 12, 2, 3],

...,

[136, 27, 32],

[138, 27, 33],

[138, 27, 33]],

[[ 52, 1, 3],

[ 10, 1, 2],

[ 38, 4, 6],

...,

[138, 27, 33],

[137, 26, 32],

[138, 27, 33]]]], dtype=uint8)

Check the year values

set(total_df['year'])

{'...',

'1936',

'1939',

'1940',

'1942',

'1953',

'1954',

'1959',

'1960',

'1965',

'1968',

'1972',

'1973',

'1974',

'1975',

'1976',

'1978',

'1979',

'1980',

'1981',

'1983',

'1984',

'1985',

'1986',

'1987',

'1988',

'1989',

'1990',

'1991',

'1992',

'1993',

'1994',

'1995',

'1996',

'1997',

'1998',

'1999',

'19세 관람가 도움말',

'2000',

'2001',

'2002',

'2003',

'2004',

'2005',

'2006',

'2007',

'2008',

'2009',

'201...',

'2010',

'2011',

'2012',

'2013',

'2014',

'2015',

'2016',

'2017',

'2018',

'2019',

'2020'}

Remove outliers

# Drop rows whose 'year' field holds scraping residue instead of a year
invalid_years = ['...', '19세 관람가 도움말', '201...']
new_df = total_df.loc[~total_df['year'].isin(invalid_years)]
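The three invalid strings filtered above were found by inspecting the set of values. A more general filter, sketched here as an alternative and not as the notebook's own method, keeps only the rows whose year parses as a number. The `demo_df` frame is a hypothetical stand-in for `total_df`.

```python
import pandas as pd

# Hypothetical toy frame that mimics the 'year' column, including the bad values
demo_df = pd.DataFrame({'year': ['2018', '...', '19세 관람가 도움말', '201...', '1999']})

# errors='coerce' turns every value that is not a number into NaN
parsed_year = pd.to_numeric(demo_df['year'], errors='coerce')
is_valid = parsed_year.notna()

# Keep only the valid rows and store the year as an integer
clean_df = demo_df.loc[is_valid].copy()
clean_df['year'] = parsed_year[is_valid].astype(int)

print(clean_df)
```

This approach would also catch malformed values that never appeared in the inspected set, at the cost of silently dropping anything non-numeric.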

new_df['year'].value_counts()

2007 80

2017 72

2016 72

2006 71

2018 68

2004 67

2015 67

2014 64

2009 63

2012 63

2013 61

2010 60

2008 54

2003 53

2011 52

2019 49

2005 48

2002 36

2001 34

2000 29

1999 24

1994 22

1998 19

1995 18

1993 17

1997 17

1992 16

1996 13

1991 9

1989 8

1988 6

1987 6

1990 6

1986 5

2020 4

1984 3

1972 2

1979 2

1975 2

1981 2

1976 2

1973 2

1985 1

1959 1

1960 1

1980 1

1978 1

1954 1

1942 1

1974 1

1968 1

1940 1

1936 1

1983 1

1953 1

1939 1

1965 1

Name: year, dtype: int64

Convert string to int

# assign() returns a new DataFrame, which avoids the SettingWithCopyWarning
# that direct column assignment on a filtered slice would raise
new_df = new_df.assign(year=new_df['year'].astype(int))

Group by era

def era(year):
    # 2010s and later
    if year >= 2010:
        return 0
    # 2000s
    elif 2000 <= year < 2010:
        return 1
    # Before 2000
    else:
        return 2

# assign() returns a new DataFrame, so no SettingWithCopyWarning is raised
new_df = new_df.assign(era=new_df['year'].apply(era))

new_df['era'].value_counts()

0 632

1 535

2 216

Name: era, dtype: int64
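The same three-way bucketing can be written without a Python-level function. The following is a minimal vectorized sketch using `np.select`, with a hypothetical `years` array standing in for the `year` column; it is an alternative formulation, not what the notebook ran.

```python
import numpy as np

# Hypothetical sample of years covering both sides of each boundary
years = np.array([1936, 1999, 2000, 2009, 2010, 2020])

# np.select uses the first condition that is true for each element:
# >= 2010 -> 0, otherwise >= 2000 -> 1, otherwise 2 (before 2000)
era_labels = np.select([years >= 2010, years >= 2000], [0, 1], default=2)

print(era_labels.tolist())
```

Checking the boundary years (1999, 2000, 2009, 2010) is a quick way to confirm that the comparisons match the intended decade cut-offs.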

Convert to one-hot vectors

def to_onehot(sequences, dimension=3):
    # One row per sample, one column per class
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        results[i, sequence] = 1.
    return results

label_vector = to_onehot(new_df['era'])

label_vector

array([[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

...,

[1., 0., 0.],

[0., 1., 0.],

[0., 1., 0.]])
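One-hot encoding can also be expressed by indexing an identity matrix, which makes the structure of the result easy to see. This is a small sketch with a hypothetical `labels` array, offered as an equivalent formulation.

```python
import numpy as np

# Hypothetical class labels in {0, 1, 2}
labels = np.array([0, 0, 1, 2, 1])

# Row k of the 3x3 identity matrix is the one-hot vector for class k,
# so fancy indexing with the labels picks the right row for every sample
one_hot = np.eye(3)[labels]

print(one_hot)
```

Each row sums to 1, and `argmax` along axis 1 recovers the original label.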

len(new_df)

1383

new_df.index

Int64Index([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9,

...

1390, 1391, 1392, 1393, 1394, 1395, 1396, 1397, 1398, 1399],

dtype='int64', length=1383)

Input scaling

poster_scaled = poster_total[new_df.index].astype('float32') / 255
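The scaling step converts `uint8` pixel values in [0, 255] to `float32` values in [0, 1]. A tiny sketch with a hypothetical 2x2 RGB image shows the effect on dtype and range.

```python
import numpy as np

# Hypothetical batch containing one 2x2 RGB image stored as uint8
toy_batch = np.array([[[[0, 128, 255], [255, 255, 255]],
                       [[10, 20, 30], [0, 0, 0]]]], dtype=np.uint8)

# Cast first, then divide, so the result stays float32 instead of float64
toy_scaled = toy_batch.astype('float32') / 255

print(toy_scaled.dtype, toy_scaled.min(), toy_scaled.max())
```

Casting to `float32` before dividing keeps memory use at four bytes per value, which matters for an array of more than a thousand 256x256 posters.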

3. Modeling

Train / test split

Splitting the data into 60% train, 20% validation, and 20% test assigns 829 of the 1,383 samples to train, 277 to validation, and 277 to test.
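The split sizes can be checked with a few lines of arithmetic. The sketch assumes that 1,106 samples are kept for training plus validation and that Keras holds out a 0.25 validation fraction by truncating the training count to an integer; both numbers come from this notebook's code and training log.

```python
n_total = 1383
n_trainval = 1106                      # samples kept for training + validation
n_test = n_total - n_trainval          # remaining samples form the test set

validation_split = 0.25
# Assumed Keras behavior: the training count is truncated, validation gets the rest
n_train = int(n_trainval * (1 - validation_split))
n_val = n_trainval - n_train

print(n_train, n_val, n_test)
```

The three counts add up to 1,383 and correspond to roughly 60%, 20%, and 20% of the data.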

# Generate a random permutation used to draw 20% of the data as the test set

np.random.seed(303)

shuffled_index = np.random.permutation(1383)

shuffled_index

array([ 176, 420, 322, ..., 1153, 530, 571])

# train: positions 0 to 1105 (1,106 samples)

# test: positions 1106 to 1382 (277 samples)

x_train = poster_scaled[shuffled_index[:1106]]

x_test = poster_scaled[shuffled_index[1106:]]

y_train = label_vector[shuffled_index[:1106]]

y_test = label_vector[shuffled_index[1106:]]

Shuffling the training data

np.random.seed(8989)

s_index = np.random.permutation(1106)

x_train = x_train[s_index]

y_train = y_train[s_index]

x_train.shape

(1106, 256, 256, 3)

y_train.shape

(1106, 3)

a. Basic CNN

def build_model():
    model = models.Sequential()
    # Block 1: two 3x3 convolutions with 32 filters, then 2x2 max pooling
    model.add(layers.Conv2D(32, (3, 3), activation='relu', padding='same',
                            input_shape=(256, 256, 3)))
    model.add(layers.Conv2D(32, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D((2, 2)))
    # Block 2: 64 filters
    model.add(layers.Conv2D(64, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(64, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D((2, 2)))
    # Block 3: 128 filters
    model.add(layers.Conv2D(128, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(128, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D((2, 2)))
    # Classifier head: one hidden layer and a 3-way softmax over the eras
    model.add(layers.Flatten())
    model.add(layers.Dense(512, activation='relu'))
    model.add(layers.Dense(3, activation='softmax'))
    model.compile(optimizer=optimizers.RMSprop(lr=0.001),
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
    return model

model = build_model()

model.name = 'default_model'

model.summary()

Model: "default_model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) (None, 256, 256, 32) 896

_________________________________________________________________

conv2d_2 (Conv2D) (None, 256, 256, 32) 9248

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 128, 128, 32) 0

_________________________________________________________________

conv2d_3 (Conv2D) (None, 128, 128, 64) 18496

_________________________________________________________________

conv2d_4 (Conv2D) (None, 128, 128, 64) 36928

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 (None, 64, 64, 64) 0

_________________________________________________________________

conv2d_5 (Conv2D) (None, 64, 64, 128) 73856

_________________________________________________________________

conv2d_6 (Conv2D) (None, 64, 64, 128) 147584

_________________________________________________________________

max_pooling2d_3 (MaxPooling2 (None, 32, 32, 128) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 131072) 0

_________________________________________________________________

dense_1 (Dense) (None, 512) 67109376

_________________________________________________________________

dense_2 (Dense) (None, 3) 1539

=================================================================

Total params: 67,397,923

Trainable params: 67,397,923

Non-trainable params: 0

_________________________________________________________________
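The parameter counts in the summary follow from the layer shapes: a convolution has one weight per kernel position and input channel plus one bias for each filter, and a dense layer has one weight per input plus one bias for each unit. The helper names below are hypothetical; the sketch only reproduces the arithmetic.

```python
def conv2d_params(kernel, in_channels, out_channels):
    # (kernel * kernel * in_channels) weights plus 1 bias, for every filter
    return (kernel * kernel * in_channels + 1) * out_channels

def dense_params(in_units, out_units):
    # in_units weights plus 1 bias, for every output unit
    return (in_units + 1) * out_units

# (input channels, output channels) of the six 3x3 convolutions
conv_channels = [(3, 32), (32, 32), (32, 64), (64, 64), (64, 128), (128, 128)]
conv_total = sum(conv2d_params(3, i, o) for i, o in conv_channels)

# Three 2x2 poolings reduce 256x256 to 32x32, with 128 channels at the end
flat_units = 32 * 32 * 128
dense_total = dense_params(flat_units, 512) + dense_params(512, 3)

total_params = conv_total + dense_total
print(conv_total, dense_total, total_params)
```

Almost all of the 67,397,923 parameters sit in the first dense layer, because it connects 131,072 flattened features to 512 units.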

def model_train(model, num_epochs):
    # Save weights only when val_accuracy improves; epoch and score go into the file name
    filepath = '/content/drive/Shared drives/personal/weights/' + model.name + '.{epoch:02d}-{val_accuracy:.4f}.hdf5'
    checkpoint = ModelCheckpoint(filepath, monitor='val_accuracy', verbose=0,
                                 save_weights_only=True, save_best_only=True,
                                 mode='auto', period=1)
    # validation_split=0.25 holds out the last 25% of the training arrays for validation
    model_history = model.fit(x_train, y_train, epochs=num_epochs, batch_size=16,
                              verbose=1, callbacks=[checkpoint],
                              validation_split=0.25, shuffle=True)
    return model_history
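The checkpoint path is a format template that is filled in with the epoch number and the monitored metric when weights are saved. A plain-Python sketch of that formatting follows; the values 6 and 0.5090 are taken from this notebook's training log, and the directory prefix is left out for brevity.

```python
# Same placeholders as in the checkpoint path, without the directory prefix
template = 'default_model' + '.{epoch:02d}-{val_accuracy:.4f}.hdf5'

# {epoch:02d} zero-pads to two digits, {val_accuracy:.4f} keeps four decimals
file_name = template.format(epoch=6, val_accuracy=0.5090)

print(file_name)
```

Embedding the score in the file name makes it possible to pick the best weights by looking at the directory listing alone.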

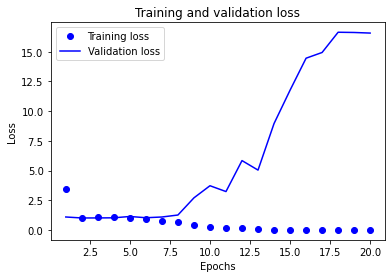

history = model_train(model, 20)

Train on 829 samples, validate on 277 samples

Epoch 1/20

829/829 [==============================] - 11s 13ms/step - loss: 3.4242 - accuracy: 0.4282 - val_loss: 1.0881 - val_accuracy: 0.3574

Epoch 2/20

829/829 [==============================] - 4s 4ms/step - loss: 1.0365 - accuracy: 0.4632 - val_loss: 1.0050 - val_accuracy: 0.4874

Epoch 3/20

829/829 [==============================] - 4s 4ms/step - loss: 1.0529 - accuracy: 0.4415 - val_loss: 1.0087 - val_accuracy: 0.4765

Epoch 4/20

829/829 [==============================] - 4s 4ms/step - loss: 1.1031 - accuracy: 0.4572 - val_loss: 1.0188 - val_accuracy: 0.4549

Epoch 5/20

829/829 [==============================] - 4s 4ms/step - loss: 1.0396 - accuracy: 0.5139 - val_loss: 1.1308 - val_accuracy: 0.4910

Epoch 6/20

829/829 [==============================] - 4s 4ms/step - loss: 0.9136 - accuracy: 0.5875 - val_loss: 1.0257 - val_accuracy: 0.5090

Epoch 7/20

829/829 [==============================] - 4s 4ms/step - loss: 0.7788 - accuracy: 0.6381 - val_loss: 1.0869 - val_accuracy: 0.4188

Epoch 8/20

829/829 [==============================] - 4s 4ms/step - loss: 0.6363 - accuracy: 0.7419 - val_loss: 1.2535 - val_accuracy: 0.4477

Epoch 9/20

829/829 [==============================] - 4s 4ms/step - loss: 0.3825 - accuracy: 0.8480 - val_loss: 2.6986 - val_accuracy: 0.4296

Epoch 10/20

829/829 [==============================] - 4s 4ms/step - loss: 0.2134 - accuracy: 0.9192 - val_loss: 3.7182 - val_accuracy: 0.4332

Epoch 11/20

829/829 [==============================] - 4s 4ms/step - loss: 0.1467 - accuracy: 0.9554 - val_loss: 3.2280 - val_accuracy: 0.4440

Epoch 12/20

829/829 [==============================] - 4s 4ms/step - loss: 0.1742 - accuracy: 0.9831 - val_loss: 5.8329 - val_accuracy: 0.4585

Epoch 13/20

829/829 [==============================] - 4s 4ms/step - loss: 0.1002 - accuracy: 0.9843 - val_loss: 5.0345 - val_accuracy: 0.4874

Epoch 14/20

829/829 [==============================] - 4s 4ms/step - loss: 0.0016 - accuracy: 1.0000 - val_loss: 8.9527 - val_accuracy: 0.4440

Epoch 15/20

829/829 [==============================] - 4s 4ms/step - loss: 2.7524e-05 - accuracy: 1.0000 - val_loss: 11.7579 - val_accuracy: 0.4910

Epoch 16/20

829/829 [==============================] - 4s 4ms/step - loss: 2.6138e-06 - accuracy: 1.0000 - val_loss: 14.4503 - val_accuracy: 0.4729

Epoch 17/20

829/829 [==============================] - 4s 4ms/step - loss: 1.7256e-08 - accuracy: 1.0000 - val_loss: 14.9324 - val_accuracy: 0.4874

Epoch 18/20

829/829 [==============================] - 4s 4ms/step - loss: 6.1833e-09 - accuracy: 1.0000 - val_loss: 16.6347 - val_accuracy: 0.4657

Epoch 19/20

829/829 [==============================] - 4s 4ms/step - loss: 2.8760e-10 - accuracy: 1.0000 - val_loss: 16.6152 - val_accuracy: 0.4693

Epoch 20/20

829/829 [==============================] - 4s 4ms/step - loss: 1.4380e-10 - accuracy: 1.0000 - val_loss: 16.5649 - val_accuracy: 0.4621
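In the log, training accuracy reaches 1.0 while validation loss keeps climbing, which is a typical overfitting pattern. The following sketch locates the best epoch by validation accuracy, using the `val_accuracy` values copied from the log; the list name is hypothetical.

```python
# val_accuracy for epochs 1..20, copied from the training log
val_accuracy_log = [
    0.3574, 0.4874, 0.4765, 0.4549, 0.4910,
    0.5090, 0.4188, 0.4477, 0.4296, 0.4332,
    0.4440, 0.4585, 0.4874, 0.4440, 0.4910,
    0.4729, 0.4874, 0.4657, 0.4693, 0.4621,
]

# Epochs are 1-based, list positions are 0-based
best_value = max(val_accuracy_log)
best_epoch = val_accuracy_log.index(best_value) + 1

print(best_epoch, best_value)
```

Validation accuracy peaks early and never improves afterwards, so training beyond that point only fits the training set more closely.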

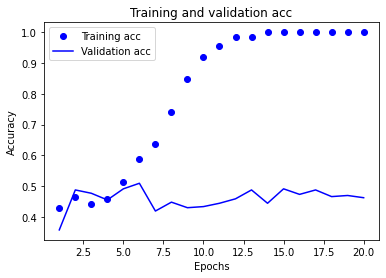

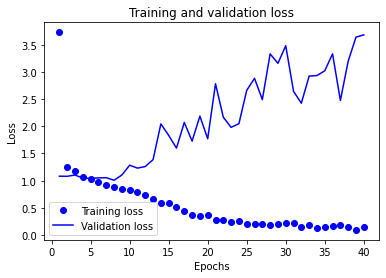

history_dict = history.history

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(loss) + 1)

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

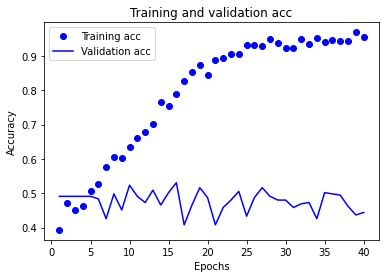

acc = history_dict['accuracy']

val_acc = history_dict['val_accuracy']

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation acc')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

model.load_weights('/content/drive/Shared drives/personal/weights/default_model.06-0.5090.hdf5')

model.evaluate(x_test, y_test)

277/277 [==============================] - 1s 3ms/step

[0.9569806161770321, 0.505415141582489]
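A test accuracy of about 0.505 is easier to interpret next to a trivial baseline. The sketch below computes the accuracy of always predicting the most frequent era, using the class counts reported earlier for the full dataset. The class balance of the 277-sample test split may differ slightly, so this is only a rough reference point.

```python
# Era counts from new_df['era'].value_counts()
era_counts = {0: 632, 1: 535, 2: 216}

total = sum(era_counts.values())
majority_class = max(era_counts, key=era_counts.get)

# Accuracy of a classifier that always predicts the majority class
baseline_accuracy = era_counts[majority_class] / total

test_accuracy = 0.505415141582489      # second value returned by model.evaluate
print(majority_class, round(baseline_accuracy, 4), test_accuracy > baseline_accuracy)
```

Under this assumption the CNN beats the majority-class baseline by only about five percentage points.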

b. ResNet50

def build_resnet():
    # Use the built-in Keras model, randomly initialized and without the classifier head
    base_model = resnet50.ResNet50(weights=None, include_top=False, input_shape=(256, 256, 3))
    output_model = base_model.output
    # Same classifier head as the basic CNN: Flatten -> Dense(512) -> 3-way softmax
    flatten_model = layers.Flatten()(output_model)
    dense_model = layers.Dense(512, activation='relu')(flatten_model)
    final_model = layers.Dense(3, activation='softmax')(dense_model)
    model = models.Model(inputs=base_model.input, outputs=final_model)
    model.compile(optimizer=optimizers.RMSprop(lr=0.001),
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
    return model

model = build_resnet()

/usr/local/lib/python3.6/dist-packages/keras_applications/resnet50.py:265: UserWarning: The output shape of `ResNet50(include_top=False)` has been changed since Keras 2.2.0.

warnings.warn('The output shape of `ResNet50(include_top=False)` '
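The size of the head added on top of ResNet50 can be estimated from the backbone's output shape. The sketch assumes the standard ResNet50 layout, with a total downsampling factor of 32 and 2,048 channels in the final stage, and no pooling after the last block when `include_top=False`; these are properties of the architecture and are not read from this notebook's output.

```python
input_size = 256
total_stride = 32          # assumed: standard ResNet50 downsampling factor
final_channels = 2048      # assumed: channels in the last ResNet50 stage

# Spatial size of the final feature map and length of the flattened vector
feature_size = input_size // total_stride
flat_units = feature_size * feature_size * final_channels

# Dense(512) on the flattened features, then Dense(3)
head_params = (flat_units + 1) * 512 + (512 + 1) * 3

print(feature_size, flat_units, head_params)
```

Under these assumptions the flattened vector has the same length as in the basic CNN, so the dense head alone would again add roughly 67 million parameters on top of the backbone.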

model.name = 'resnet_model'

model.summary()

Model: "resnet_model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_8 (InputLayer) (None, 256, 256, 3) 0

__________________________________________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 262, 262, 3) 0 input_8[0][0]

__________________________________________________________________________________________________

conv1 (Conv2D) (None, 128, 128, 64) 9472 conv1_pad[0][0]

__________________________________________________________________________________________________

bn_conv1 (BatchNormalization) (None, 128, 128, 64) 256 conv1[0][0]

__________________________________________________________________________________________________

activation_246 (Activation) (None, 128, 128, 64) 0 bn_conv1[0][0]

__________________________________________________________________________________________________

pool1_pad (ZeroPadding2D) (None, 130, 130, 64) 0 activation_246[0][0]

__________________________________________________________________________________________________

max_pooling2d_17 (MaxPooling2D) (None, 64, 64, 64) 0 pool1_pad[0][0]

__________________________________________________________________________________________________

res2a_branch2a (Conv2D) (None, 64, 64, 64) 4160 max_pooling2d_17[0][0]

__________________________________________________________________________________________________

bn2a_branch2a (BatchNormalizati (None, 64, 64, 64) 256 res2a_branch2a[0][0]

__________________________________________________________________________________________________

activation_247 (Activation) (None, 64, 64, 64) 0 bn2a_branch2a[0][0]

__________________________________________________________________________________________________

res2a_branch2b (Conv2D) (None, 64, 64, 64) 36928 activation_247[0][0]

__________________________________________________________________________________________________

bn2a_branch2b (BatchNormalizati (None, 64, 64, 64) 256 res2a_branch2b[0][0]

__________________________________________________________________________________________________

activation_248 (Activation) (None, 64, 64, 64) 0 bn2a_branch2b[0][0]

__________________________________________________________________________________________________

res2a_branch2c (Conv2D) (None, 64, 64, 256) 16640 activation_248[0][0]

__________________________________________________________________________________________________

res2a_branch1 (Conv2D) (None, 64, 64, 256) 16640 max_pooling2d_17[0][0]

__________________________________________________________________________________________________

bn2a_branch2c (BatchNormalizati (None, 64, 64, 256) 1024 res2a_branch2c[0][0]

__________________________________________________________________________________________________

bn2a_branch1 (BatchNormalizatio (None, 64, 64, 256) 1024 res2a_branch1[0][0]

__________________________________________________________________________________________________

add_81 (Add) (None, 64, 64, 256) 0 bn2a_branch2c[0][0]

bn2a_branch1[0][0]

__________________________________________________________________________________________________

activation_249 (Activation) (None, 64, 64, 256) 0 add_81[0][0]

__________________________________________________________________________________________________

res2b_branch2a (Conv2D) (None, 64, 64, 64) 16448 activation_249[0][0]

__________________________________________________________________________________________________

bn2b_branch2a (BatchNormalizati (None, 64, 64, 64) 256 res2b_branch2a[0][0]

__________________________________________________________________________________________________

activation_250 (Activation) (None, 64, 64, 64) 0 bn2b_branch2a[0][0]

__________________________________________________________________________________________________

res2b_branch2b (Conv2D) (None, 64, 64, 64) 36928 activation_250[0][0]

__________________________________________________________________________________________________

bn2b_branch2b (BatchNormalizati (None, 64, 64, 64) 256 res2b_branch2b[0][0]

__________________________________________________________________________________________________

activation_251 (Activation) (None, 64, 64, 64) 0 bn2b_branch2b[0][0]

__________________________________________________________________________________________________

res2b_branch2c (Conv2D) (None, 64, 64, 256) 16640 activation_251[0][0]

__________________________________________________________________________________________________

bn2b_branch2c (BatchNormalizati (None, 64, 64, 256) 1024 res2b_branch2c[0][0]

__________________________________________________________________________________________________

add_82 (Add) (None, 64, 64, 256) 0 bn2b_branch2c[0][0]

activation_249[0][0]

__________________________________________________________________________________________________

activation_252 (Activation) (None, 64, 64, 256) 0 add_82[0][0]

__________________________________________________________________________________________________

res2c_branch2a (Conv2D) (None, 64, 64, 64) 16448 activation_252[0][0]

__________________________________________________________________________________________________

bn2c_branch2a (BatchNormalizati (None, 64, 64, 64) 256 res2c_branch2a[0][0]

__________________________________________________________________________________________________

activation_253 (Activation) (None, 64, 64, 64) 0 bn2c_branch2a[0][0]

__________________________________________________________________________________________________

res2c_branch2b (Conv2D) (None, 64, 64, 64) 36928 activation_253[0][0]

__________________________________________________________________________________________________

bn2c_branch2b (BatchNormalizati (None, 64, 64, 64) 256 res2c_branch2b[0][0]

__________________________________________________________________________________________________

activation_254 (Activation) (None, 64, 64, 64) 0 bn2c_branch2b[0][0]

__________________________________________________________________________________________________

res2c_branch2c (Conv2D) (None, 64, 64, 256) 16640 activation_254[0][0]

__________________________________________________________________________________________________

bn2c_branch2c (BatchNormalizati (None, 64, 64, 256) 1024 res2c_branch2c[0][0]

__________________________________________________________________________________________________

add_83 (Add) (None, 64, 64, 256) 0 bn2c_branch2c[0][0]

activation_252[0][0]

__________________________________________________________________________________________________

activation_255 (Activation) (None, 64, 64, 256) 0 add_83[0][0]

__________________________________________________________________________________________________

res3a_branch2a (Conv2D) (None, 32, 32, 128) 32896 activation_255[0][0]

__________________________________________________________________________________________________

bn3a_branch2a (BatchNormalizati (None, 32, 32, 128) 512 res3a_branch2a[0][0]

__________________________________________________________________________________________________

activation_256 (Activation) (None, 32, 32, 128) 0 bn3a_branch2a[0][0]

__________________________________________________________________________________________________

res3a_branch2b (Conv2D) (None, 32, 32, 128) 147584 activation_256[0][0]

__________________________________________________________________________________________________

bn3a_branch2b (BatchNormalizati (None, 32, 32, 128) 512 res3a_branch2b[0][0]

__________________________________________________________________________________________________

activation_257 (Activation) (None, 32, 32, 128) 0 bn3a_branch2b[0][0]

__________________________________________________________________________________________________

res3a_branch2c (Conv2D) (None, 32, 32, 512) 66048 activation_257[0][0]

__________________________________________________________________________________________________

res3a_branch1 (Conv2D) (None, 32, 32, 512) 131584 activation_255[0][0]

__________________________________________________________________________________________________

bn3a_branch2c (BatchNormalizati (None, 32, 32, 512) 2048 res3a_branch2c[0][0]

__________________________________________________________________________________________________

bn3a_branch1 (BatchNormalizatio (None, 32, 32, 512) 2048 res3a_branch1[0][0]

__________________________________________________________________________________________________

add_84 (Add) (None, 32, 32, 512) 0 bn3a_branch2c[0][0]

bn3a_branch1[0][0]

__________________________________________________________________________________________________

activation_258 (Activation) (None, 32, 32, 512) 0 add_84[0][0]

__________________________________________________________________________________________________

res3b_branch2a (Conv2D) (None, 32, 32, 128) 65664 activation_258[0][0]

__________________________________________________________________________________________________

bn3b_branch2a (BatchNormalizati (None, 32, 32, 128) 512 res3b_branch2a[0][0]

__________________________________________________________________________________________________

activation_259 (Activation) (None, 32, 32, 128) 0 bn3b_branch2a[0][0]

__________________________________________________________________________________________________

res3b_branch2b (Conv2D) (None, 32, 32, 128) 147584 activation_259[0][0]

__________________________________________________________________________________________________

bn3b_branch2b (BatchNormalizati (None, 32, 32, 128) 512 res3b_branch2b[0][0]

__________________________________________________________________________________________________

activation_260 (Activation) (None, 32, 32, 128) 0 bn3b_branch2b[0][0]

__________________________________________________________________________________________________

res3b_branch2c (Conv2D) (None, 32, 32, 512) 66048 activation_260[0][0]

__________________________________________________________________________________________________

bn3b_branch2c (BatchNormalizati (None, 32, 32, 512) 2048 res3b_branch2c[0][0]

__________________________________________________________________________________________________

add_85 (Add) (None, 32, 32, 512) 0 bn3b_branch2c[0][0]

activation_258[0][0]

__________________________________________________________________________________________________

activation_261 (Activation) (None, 32, 32, 512) 0 add_85[0][0]

__________________________________________________________________________________________________

res3c_branch2a (Conv2D) (None, 32, 32, 128) 65664 activation_261[0][0]

__________________________________________________________________________________________________

bn3c_branch2a (BatchNormalizati (None, 32, 32, 128) 512 res3c_branch2a[0][0]

__________________________________________________________________________________________________

activation_262 (Activation) (None, 32, 32, 128) 0 bn3c_branch2a[0][0]

__________________________________________________________________________________________________

res3c_branch2b (Conv2D) (None, 32, 32, 128) 147584 activation_262[0][0]

__________________________________________________________________________________________________

bn3c_branch2b (BatchNormalizati (None, 32, 32, 128) 512 res3c_branch2b[0][0]

__________________________________________________________________________________________________

activation_263 (Activation) (None, 32, 32, 128) 0 bn3c_branch2b[0][0]

__________________________________________________________________________________________________

res3c_branch2c (Conv2D) (None, 32, 32, 512) 66048 activation_263[0][0]

__________________________________________________________________________________________________

bn3c_branch2c (BatchNormalizati (None, 32, 32, 512) 2048 res3c_branch2c[0][0]

__________________________________________________________________________________________________

add_86 (Add) (None, 32, 32, 512) 0 bn3c_branch2c[0][0]

activation_261[0][0]

__________________________________________________________________________________________________

activation_264 (Activation) (None, 32, 32, 512) 0 add_86[0][0]

__________________________________________________________________________________________________

res3d_branch2a (Conv2D) (None, 32, 32, 128) 65664 activation_264[0][0]

__________________________________________________________________________________________________

bn3d_branch2a (BatchNormalizati (None, 32, 32, 128) 512 res3d_branch2a[0][0]

__________________________________________________________________________________________________

activation_265 (Activation) (None, 32, 32, 128) 0 bn3d_branch2a[0][0]

__________________________________________________________________________________________________

res3d_branch2b (Conv2D) (None, 32, 32, 128) 147584 activation_265[0][0]

__________________________________________________________________________________________________

bn3d_branch2b (BatchNormalizati (None, 32, 32, 128) 512 res3d_branch2b[0][0]

__________________________________________________________________________________________________

activation_266 (Activation) (None, 32, 32, 128) 0 bn3d_branch2b[0][0]

__________________________________________________________________________________________________

res3d_branch2c (Conv2D) (None, 32, 32, 512) 66048 activation_266[0][0]

__________________________________________________________________________________________________

bn3d_branch2c (BatchNormalizati (None, 32, 32, 512) 2048 res3d_branch2c[0][0]

__________________________________________________________________________________________________

add_87 (Add) (None, 32, 32, 512) 0 bn3d_branch2c[0][0]

activation_264[0][0]

__________________________________________________________________________________________________

activation_267 (Activation) (None, 32, 32, 512) 0 add_87[0][0]

__________________________________________________________________________________________________

res4a_branch2a (Conv2D) (None, 16, 16, 256) 131328 activation_267[0][0]

__________________________________________________________________________________________________

bn4a_branch2a (BatchNormalizati (None, 16, 16, 256) 1024 res4a_branch2a[0][0]

__________________________________________________________________________________________________

activation_268 (Activation) (None, 16, 16, 256) 0 bn4a_branch2a[0][0]

__________________________________________________________________________________________________

res4a_branch2b (Conv2D) (None, 16, 16, 256) 590080 activation_268[0][0]

__________________________________________________________________________________________________

bn4a_branch2b (BatchNormalizati (None, 16, 16, 256) 1024 res4a_branch2b[0][0]

__________________________________________________________________________________________________

activation_269 (Activation) (None, 16, 16, 256) 0 bn4a_branch2b[0][0]

__________________________________________________________________________________________________

res4a_branch2c (Conv2D) (None, 16, 16, 1024) 263168 activation_269[0][0]

__________________________________________________________________________________________________

res4a_branch1 (Conv2D) (None, 16, 16, 1024) 525312 activation_267[0][0]

__________________________________________________________________________________________________

bn4a_branch2c (BatchNormalizati (None, 16, 16, 1024) 4096 res4a_branch2c[0][0]

__________________________________________________________________________________________________

bn4a_branch1 (BatchNormalizatio (None, 16, 16, 1024) 4096 res4a_branch1[0][0]

__________________________________________________________________________________________________

add_88 (Add) (None, 16, 16, 1024) 0 bn4a_branch2c[0][0]

bn4a_branch1[0][0]

__________________________________________________________________________________________________

activation_270 (Activation) (None, 16, 16, 1024) 0 add_88[0][0]

__________________________________________________________________________________________________

res4b_branch2a (Conv2D) (None, 16, 16, 256) 262400 activation_270[0][0]

__________________________________________________________________________________________________

bn4b_branch2a (BatchNormalizati (None, 16, 16, 256) 1024 res4b_branch2a[0][0]

__________________________________________________________________________________________________

activation_271 (Activation) (None, 16, 16, 256) 0 bn4b_branch2a[0][0]

__________________________________________________________________________________________________

res4b_branch2b (Conv2D) (None, 16, 16, 256) 590080 activation_271[0][0]

__________________________________________________________________________________________________

bn4b_branch2b (BatchNormalizati (None, 16, 16, 256) 1024 res4b_branch2b[0][0]

__________________________________________________________________________________________________

activation_272 (Activation) (None, 16, 16, 256) 0 bn4b_branch2b[0][0]

__________________________________________________________________________________________________

res4b_branch2c (Conv2D) (None, 16, 16, 1024) 263168 activation_272[0][0]

__________________________________________________________________________________________________

bn4b_branch2c (BatchNormalizati (None, 16, 16, 1024) 4096 res4b_branch2c[0][0]

__________________________________________________________________________________________________

add_89 (Add) (None, 16, 16, 1024) 0 bn4b_branch2c[0][0]

activation_270[0][0]

__________________________________________________________________________________________________

activation_273 (Activation) (None, 16, 16, 1024) 0 add_89[0][0]

__________________________________________________________________________________________________

res4c_branch2a (Conv2D) (None, 16, 16, 256) 262400 activation_273[0][0]

__________________________________________________________________________________________________

bn4c_branch2a (BatchNormalizati (None, 16, 16, 256) 1024 res4c_branch2a[0][0]

__________________________________________________________________________________________________

activation_274 (Activation) (None, 16, 16, 256) 0 bn4c_branch2a[0][0]

__________________________________________________________________________________________________

res4c_branch2b (Conv2D) (None, 16, 16, 256) 590080 activation_274[0][0]

__________________________________________________________________________________________________

bn4c_branch2b (BatchNormalizati (None, 16, 16, 256) 1024 res4c_branch2b[0][0]

__________________________________________________________________________________________________

activation_275 (Activation) (None, 16, 16, 256) 0 bn4c_branch2b[0][0]

__________________________________________________________________________________________________

res4c_branch2c (Conv2D) (None, 16, 16, 1024) 263168 activation_275[0][0]

__________________________________________________________________________________________________

bn4c_branch2c (BatchNormalizati (None, 16, 16, 1024) 4096 res4c_branch2c[0][0]

__________________________________________________________________________________________________

add_90 (Add) (None, 16, 16, 1024) 0 bn4c_branch2c[0][0]

activation_273[0][0]

__________________________________________________________________________________________________

activation_276 (Activation) (None, 16, 16, 1024) 0 add_90[0][0]

__________________________________________________________________________________________________

res4d_branch2a (Conv2D) (None, 16, 16, 256) 262400 activation_276[0][0]

__________________________________________________________________________________________________

bn4d_branch2a (BatchNormalizati (None, 16, 16, 256) 1024 res4d_branch2a[0][0]

__________________________________________________________________________________________________

activation_277 (Activation) (None, 16, 16, 256) 0 bn4d_branch2a[0][0]

__________________________________________________________________________________________________

res4d_branch2b (Conv2D) (None, 16, 16, 256) 590080 activation_277[0][0]

__________________________________________________________________________________________________

bn4d_branch2b (BatchNormalizati (None, 16, 16, 256) 1024 res4d_branch2b[0][0]

__________________________________________________________________________________________________

activation_278 (Activation) (None, 16, 16, 256) 0 bn4d_branch2b[0][0]

__________________________________________________________________________________________________

res4d_branch2c (Conv2D) (None, 16, 16, 1024) 263168 activation_278[0][0]

__________________________________________________________________________________________________

bn4d_branch2c (BatchNormalizati (None, 16, 16, 1024) 4096 res4d_branch2c[0][0]

__________________________________________________________________________________________________

add_91 (Add) (None, 16, 16, 1024) 0 bn4d_branch2c[0][0]

activation_276[0][0]

__________________________________________________________________________________________________

activation_279 (Activation) (None, 16, 16, 1024) 0 add_91[0][0]

__________________________________________________________________________________________________

res4e_branch2a (Conv2D) (None, 16, 16, 256) 262400 activation_279[0][0]

__________________________________________________________________________________________________

bn4e_branch2a (BatchNormalizati (None, 16, 16, 256) 1024 res4e_branch2a[0][0]

__________________________________________________________________________________________________

activation_280 (Activation) (None, 16, 16, 256) 0 bn4e_branch2a[0][0]

__________________________________________________________________________________________________

res4e_branch2b (Conv2D) (None, 16, 16, 256) 590080 activation_280[0][0]

__________________________________________________________________________________________________

bn4e_branch2b (BatchNormalizati (None, 16, 16, 256) 1024 res4e_branch2b[0][0]

__________________________________________________________________________________________________

activation_281 (Activation) (None, 16, 16, 256) 0 bn4e_branch2b[0][0]

__________________________________________________________________________________________________

res4e_branch2c (Conv2D) (None, 16, 16, 1024) 263168 activation_281[0][0]

__________________________________________________________________________________________________

bn4e_branch2c (BatchNormalizati (None, 16, 16, 1024) 4096 res4e_branch2c[0][0]

__________________________________________________________________________________________________

add_92 (Add) (None, 16, 16, 1024) 0 bn4e_branch2c[0][0]

activation_279[0][0]

__________________________________________________________________________________________________

activation_282 (Activation) (None, 16, 16, 1024) 0 add_92[0][0]

__________________________________________________________________________________________________

res4f_branch2a (Conv2D) (None, 16, 16, 256) 262400 activation_282[0][0]

__________________________________________________________________________________________________

bn4f_branch2a (BatchNormalizati (None, 16, 16, 256) 1024 res4f_branch2a[0][0]

__________________________________________________________________________________________________

activation_283 (Activation) (None, 16, 16, 256) 0 bn4f_branch2a[0][0]

__________________________________________________________________________________________________

res4f_branch2b (Conv2D) (None, 16, 16, 256) 590080 activation_283[0][0]

__________________________________________________________________________________________________

bn4f_branch2b (BatchNormalizati (None, 16, 16, 256) 1024 res4f_branch2b[0][0]

__________________________________________________________________________________________________

activation_284 (Activation) (None, 16, 16, 256) 0 bn4f_branch2b[0][0]

__________________________________________________________________________________________________

res4f_branch2c (Conv2D) (None, 16, 16, 1024) 263168 activation_284[0][0]

__________________________________________________________________________________________________

bn4f_branch2c (BatchNormalizati (None, 16, 16, 1024) 4096 res4f_branch2c[0][0]

__________________________________________________________________________________________________

add_93 (Add) (None, 16, 16, 1024) 0 bn4f_branch2c[0][0]

activation_282[0][0]

__________________________________________________________________________________________________

activation_285 (Activation) (None, 16, 16, 1024) 0 add_93[0][0]

__________________________________________________________________________________________________

res5a_branch2a (Conv2D) (None, 8, 8, 512) 524800 activation_285[0][0]

__________________________________________________________________________________________________

bn5a_branch2a (BatchNormalizati (None, 8, 8, 512) 2048 res5a_branch2a[0][0]

__________________________________________________________________________________________________

activation_286 (Activation) (None, 8, 8, 512) 0 bn5a_branch2a[0][0]

__________________________________________________________________________________________________

res5a_branch2b (Conv2D) (None, 8, 8, 512) 2359808 activation_286[0][0]

__________________________________________________________________________________________________

bn5a_branch2b (BatchNormalizati (None, 8, 8, 512) 2048 res5a_branch2b[0][0]

__________________________________________________________________________________________________

activation_287 (Activation) (None, 8, 8, 512) 0 bn5a_branch2b[0][0]

__________________________________________________________________________________________________

res5a_branch2c (Conv2D) (None, 8, 8, 2048) 1050624 activation_287[0][0]

__________________________________________________________________________________________________

res5a_branch1 (Conv2D) (None, 8, 8, 2048) 2099200 activation_285[0][0]

__________________________________________________________________________________________________

bn5a_branch2c (BatchNormalizati (None, 8, 8, 2048) 8192 res5a_branch2c[0][0]

__________________________________________________________________________________________________

bn5a_branch1 (BatchNormalizatio (None, 8, 8, 2048) 8192 res5a_branch1[0][0]

__________________________________________________________________________________________________

add_94 (Add) (None, 8, 8, 2048) 0 bn5a_branch2c[0][0]

bn5a_branch1[0][0]

__________________________________________________________________________________________________

activation_288 (Activation) (None, 8, 8, 2048) 0 add_94[0][0]

__________________________________________________________________________________________________

res5b_branch2a (Conv2D) (None, 8, 8, 512) 1049088 activation_288[0][0]

__________________________________________________________________________________________________

bn5b_branch2a (BatchNormalizati (None, 8, 8, 512) 2048 res5b_branch2a[0][0]

__________________________________________________________________________________________________

activation_289 (Activation) (None, 8, 8, 512) 0 bn5b_branch2a[0][0]

__________________________________________________________________________________________________

res5b_branch2b (Conv2D) (None, 8, 8, 512) 2359808 activation_289[0][0]

__________________________________________________________________________________________________

bn5b_branch2b (BatchNormalizati (None, 8, 8, 512) 2048 res5b_branch2b[0][0]

__________________________________________________________________________________________________

activation_290 (Activation) (None, 8, 8, 512) 0 bn5b_branch2b[0][0]

__________________________________________________________________________________________________

res5b_branch2c (Conv2D) (None, 8, 8, 2048) 1050624 activation_290[0][0]

__________________________________________________________________________________________________

bn5b_branch2c (BatchNormalizati (None, 8, 8, 2048) 8192 res5b_branch2c[0][0]

__________________________________________________________________________________________________

add_95 (Add) (None, 8, 8, 2048) 0 bn5b_branch2c[0][0]

activation_288[0][0]

__________________________________________________________________________________________________

activation_291 (Activation) (None, 8, 8, 2048) 0 add_95[0][0]

__________________________________________________________________________________________________

res5c_branch2a (Conv2D) (None, 8, 8, 512) 1049088 activation_291[0][0]

__________________________________________________________________________________________________

bn5c_branch2a (BatchNormalizati (None, 8, 8, 512) 2048 res5c_branch2a[0][0]

__________________________________________________________________________________________________

activation_292 (Activation) (None, 8, 8, 512) 0 bn5c_branch2a[0][0]

__________________________________________________________________________________________________

res5c_branch2b (Conv2D) (None, 8, 8, 512) 2359808 activation_292[0][0]

__________________________________________________________________________________________________

bn5c_branch2b (BatchNormalizati (None, 8, 8, 512) 2048 res5c_branch2b[0][0]

__________________________________________________________________________________________________

activation_293 (Activation) (None, 8, 8, 512) 0 bn5c_branch2b[0][0]

__________________________________________________________________________________________________

res5c_branch2c (Conv2D) (None, 8, 8, 2048) 1050624 activation_293[0][0]

__________________________________________________________________________________________________

bn5c_branch2c (BatchNormalizati (None, 8, 8, 2048) 8192 res5c_branch2c[0][0]

__________________________________________________________________________________________________

add_96 (Add) (None, 8, 8, 2048) 0 bn5c_branch2c[0][0]

activation_291[0][0]

__________________________________________________________________________________________________

activation_294 (Activation) (None, 8, 8, 2048) 0 add_96[0][0]

__________________________________________________________________________________________________

flatten_5 (Flatten) (None, 131072) 0 activation_294[0][0]

__________________________________________________________________________________________________

dense_12 (Dense) (None, 512) 67109376 flatten_5[0][0]

__________________________________________________________________________________________________

dense_13 (Dense) (None, 3) 1539 dense_12[0][0]

==================================================================================================

Total params: 90,698,627

Trainable params: 90,645,507

Non-trainable params: 53,120

__________________________________________________________________________________________________
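As a sanity check on the summary above, the parameter counts of the two Dense layers can be reproduced by hand: a fully connected layer has inputs × units weights plus one bias per unit. The sketch below uses only the shapes and totals printed in the summary. The helper name `dense_params` is made up for illustration and is not part of the original notebook.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected (Dense) layer."""
    return n_inputs * n_units + n_units

# Output of activation_294 is (8, 8, 2048); Flatten turns it into one vector.
flatten_size = 8 * 8 * 2048

dense_12 = dense_params(flatten_size, 512)  # Flatten -> Dense(512)
dense_13 = dense_params(512, 3)             # Dense(512) -> Dense(3)

total_params = 90_698_627                   # "Total params" from the summary
head_params = dense_12 + dense_13
backbone_params = total_params - head_params

print(flatten_size)     # 131072
print(dense_12)         # 67109376
print(dense_13)         # 1539
print(backbone_params)  # 23587712
print(round(head_params / total_params, 3))  # share of parameters in the head
```

Under these numbers, roughly three quarters of the model's parameters sit in the Flatten-to-Dense head rather than in the ResNet50 convolutional layers.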

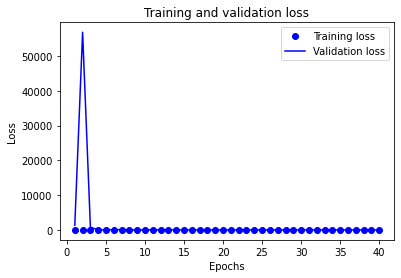

history = model_train(model, 40)

Train on 829 samples, validate on 277 samples

Epoch 1/40

829/829 [==============================] - 27s 32ms/step - loss: 110.0953 - accuracy: 0.3390 - val_loss: 1355.0505 - val_accuracy: 0.3069

Epoch 2/40

829/829 [==============================] - 15s 18ms/step - loss: 4.3456 - accuracy: 0.4089 - val_loss: 56807.1282 - val_accuracy: 0.3430

Epoch 3/40

829/829 [==============================] - 14s 17ms/step - loss: 1.9580 - accuracy: 0.4101 - val_loss: 776.6751 - val_accuracy: 0.4043

Epoch 4/40

829/829 [==============================] - 14s 17ms/step - loss: 1.2369 - accuracy: 0.4234 - val_loss: 2.1374 - val_accuracy: 0.4513

Epoch 5/40

829/829 [==============================] - 15s 18ms/step - loss: 1.0994 - accuracy: 0.4017 - val_loss: 6.5897 - val_accuracy: 0.2996

Epoch 6/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0461 - accuracy: 0.4343 - val_loss: 40.6376 - val_accuracy: 0.3682

Epoch 7/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0381 - accuracy: 0.4234 - val_loss: 83.7707 - val_accuracy: 0.3827

Epoch 8/40

829/829 [==============================] - 14s 17ms/step - loss: 1.1470 - accuracy: 0.4234 - val_loss: 1.0714 - val_accuracy: 0.4910

Epoch 9/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0752 - accuracy: 0.4524 - val_loss: 1.1472 - val_accuracy: 0.4621

Epoch 10/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0367 - accuracy: 0.4680 - val_loss: 4.5824 - val_accuracy: 0.3574

Epoch 11/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0282 - accuracy: 0.4379 - val_loss: 48.4230 - val_accuracy: 0.4838

Epoch 12/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0280 - accuracy: 0.4258 - val_loss: 2.9461 - val_accuracy: 0.4910

Epoch 13/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0164 - accuracy: 0.4258 - val_loss: 14.7316 - val_accuracy: 0.4765

Epoch 14/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0365 - accuracy: 0.4270 - val_loss: 30.3243 - val_accuracy: 0.4874

Epoch 15/40

829/829 [==============================] - 14s 17ms/step - loss: 1.0087 - accuracy: 0.4210 - val_loss: 2.2417 - val_accuracy: 0.4693

Epoch 16/40

829/829 [==============================] - 14s 17ms/step - loss: 0.9984 - accuracy: 0.4246 - val_loss: 3.3897 - val_accuracy: 0.3755

Epoch 17/40

829/829 [==============================] - 14s 17ms/step - loss: 0.9947 - accuracy: 0.4210 - val_loss: 2.5354 - val_accuracy: 0.4332

Epoch 18/40

829/829 [==============================] - 15s 18ms/step - loss: 0.9816 - accuracy: 0.4294 - val_loss: 2.5836 - val_accuracy: 0.4260

Epoch 19/40

829/829 [==============================] - 15s 18ms/step - loss: 0.9905 - accuracy: 0.4367 - val_loss: 3.7856 - val_accuracy: 0.4946

Epoch 20/40

829/829 [==============================] - 15s 18ms/step - loss: 0.9871 - accuracy: 0.4367 - val_loss: 5.0321 - val_accuracy: 0.3610

Epoch 21/40

829/829 [==============================] - 15s 17ms/step - loss: 0.9815 - accuracy: 0.4511 - val_loss: 1.1294 - val_accuracy: 0.4838

Epoch 22/40

829/829 [==============================] - 15s 17ms/step - loss: 0.9706 - accuracy: 0.4499 - val_loss: 1.0897 - val_accuracy: 0.5126

Epoch 23/40

829/829 [==============================] - 15s 17ms/step - loss: 0.9557 - accuracy: 0.4560 - val_loss: 1.1240 - val_accuracy: 0.5054

Epoch 24/40

829/829 [==============================] - 14s 17ms/step - loss: 0.9380 - accuracy: 0.4511 - val_loss: 2.8845 - val_accuracy: 0.3755

Epoch 25/40

829/829 [==============================] - 15s 18ms/step - loss: 0.9309 - accuracy: 0.4596 - val_loss: 1.5849 - val_accuracy: 0.4765

Epoch 26/40

829/829 [==============================] - 15s 18ms/step - loss: 0.9354 - accuracy: 0.4885 - val_loss: 1.0172 - val_accuracy: 0.5126

Epoch 27/40

829/829 [==============================] - 14s 17ms/step - loss: 0.8974 - accuracy: 0.5139 - val_loss: 1.3205 - val_accuracy: 0.4946

Epoch 28/40

829/829 [==============================] - 14s 17ms/step - loss: 0.8663 - accuracy: 0.5042 - val_loss: 1.4609 - val_accuracy: 0.4657

Epoch 29/40

829/829 [==============================] - 15s 17ms/step - loss: 0.8372 - accuracy: 0.5404 - val_loss: 1.1364 - val_accuracy: 0.4874

Epoch 30/40

829/829 [==============================] - 15s 18ms/step - loss: 0.7965 - accuracy: 0.5344 - val_loss: 2.7651 - val_accuracy: 0.4946

Epoch 31/40

829/829 [==============================] - 15s 18ms/step - loss: 0.7794 - accuracy: 0.5633 - val_loss: 1.0393 - val_accuracy: 0.5379

Epoch 32/40

829/829 [==============================] - 15s 18ms/step - loss: 0.7651 - accuracy: 0.5959 - val_loss: 1.7666 - val_accuracy: 0.4621

Epoch 33/40

829/829 [==============================] - 15s 18ms/step - loss: 0.7173 - accuracy: 0.6671 - val_loss: 2.4147 - val_accuracy: 0.4621

Epoch 34/40

829/829 [==============================] - 15s 18ms/step - loss: 0.6985 - accuracy: 0.6731 - val_loss: 1.9983 - val_accuracy: 0.4729

Epoch 35/40

829/829 [==============================] - 14s 17ms/step - loss: 0.6956 - accuracy: 0.7081 - val_loss: 3.1167 - val_accuracy: 0.4585

Epoch 36/40

829/829 [==============================] - 15s 18ms/step - loss: 0.6369 - accuracy: 0.7165 - val_loss: 1.8084 - val_accuracy: 0.4224

Epoch 37/40

829/829 [==============================] - 15s 18ms/step - loss: 0.5775 - accuracy: 0.7660 - val_loss: 1.4000 - val_accuracy: 0.4729

Epoch 38/40

829/829 [==============================] - 15s 18ms/step - loss: 0.5752 - accuracy: 0.7503 - val_loss: 1.7759 - val_accuracy: 0.4188

Epoch 39/40

829/829 [==============================] - 14s 17ms/step - loss: 0.5381 - accuracy: 0.7853 - val_loss: 2.1087 - val_accuracy: 0.4801

Epoch 40/40

829/829 [==============================] - 15s 18ms/step - loss: 0.4921 - accuracy: 0.7998 - val_loss: 2.5755 - val_accuracy: 0.4946

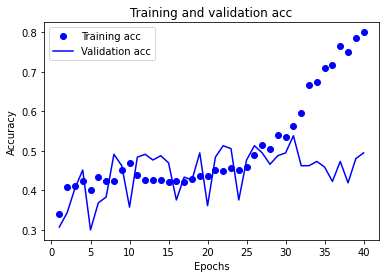

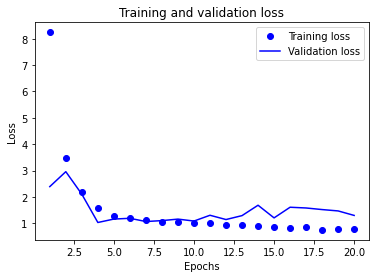

history_dict = history.history

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(loss) + 1)

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

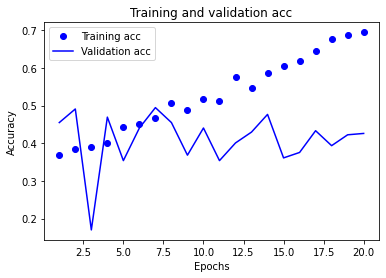

acc = history_dict['accuracy']

val_acc = history_dict['val_accuracy']

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation acc')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

model.load_weights('/content/drive/Shared drives/personal/weights/resnet_model.31-0.5379.hdf5')

model.evaluate(x_test, y_test)

277/277 [==============================] - 2s 6ms/step

[1.1417856199216325, 0.47653430700302124]
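The checkpoint loaded above, `resnet_model.31-0.5379.hdf5`, corresponds to epoch 31 of the training log, which has the highest `val_accuracy` (0.5379) of the 40 epochs. A minimal sketch of picking that epoch programmatically from a Keras-style history dictionary follows. The `best_epoch` helper and the shortened sample (epochs 29 to 32 of the log) are illustrative assumptions, not part of the original notebook.

```python
def best_epoch(history_dict, key='val_accuracy'):
    """Return (1-based epoch index, value) of the highest entry under `key`."""
    values = history_dict[key]
    idx = max(range(len(values)), key=lambda i: values[i])
    return idx + 1, values[idx]

# val_accuracy for epochs 29..32, copied from the training log.
sample = {'val_accuracy': [0.4874, 0.4946, 0.5379, 0.4621]}
first_epoch = 29

offset, value = best_epoch(sample)
print(first_epoch + offset - 1, value)  # 31 0.5379
```

On the real run, the same helper would be called as `best_epoch(history.history)` over the full 40-epoch history.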

c. Inception V3

def build_inception():

    # Use the built-in Keras InceptionV3 model (randomly initialized, without its classifier head)

    base_model = inception_v3.InceptionV3(weights=None, include_top=False, input_shape=(256, 256, 3))

    output_model = base_model.output

    # Classification head: flatten the feature maps, then Dense(512) -> Dense(3, softmax)

    flatten_model = layers.Flatten()(output_model)

    dense_model = layers.Dense(512, activation='relu')(flatten_model)

    final_model = layers.Dense(3, activation='softmax')(dense_model)

    model = models.Model(inputs=base_model.input, outputs=final_model)

    model.compile(optimizer=optimizers.RMSprop(lr=0.0001),

                  loss='categorical_crossentropy',

                  metrics=['accuracy'])

    return model

model = build_inception()

model.name = 'inception_model'

model.summary()

Model: "inception_model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_12 (InputLayer) (None, 256, 256, 3) 0

__________________________________________________________________________________________________

conv2d_53 (Conv2D) (None, 127, 127, 32) 864 input_12[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 127, 127, 32) 96 conv2d_53[0][0]

__________________________________________________________________________________________________

activation_295 (Activation) (None, 127, 127, 32) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

conv2d_54 (Conv2D) (None, 125, 125, 32) 9216 activation_295[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 125, 125, 32) 96 conv2d_54[0][0]

__________________________________________________________________________________________________

activation_296 (Activation) (None, 125, 125, 32) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

conv2d_55 (Conv2D) (None, 125, 125, 64) 18432 activation_296[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 125, 125, 64) 192 conv2d_55[0][0]

__________________________________________________________________________________________________

activation_297 (Activation) (None, 125, 125, 64) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

max_pooling2d_18 (MaxPooling2D) (None, 62, 62, 64) 0 activation_297[0][0]

__________________________________________________________________________________________________

conv2d_56 (Conv2D) (None, 62, 62, 80) 5120 max_pooling2d_18[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 62, 62, 80) 240 conv2d_56[0][0]

__________________________________________________________________________________________________

activation_298 (Activation) (None, 62, 62, 80) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

conv2d_57 (Conv2D) (None, 60, 60, 192) 138240 activation_298[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 60, 60, 192) 576 conv2d_57[0][0]

__________________________________________________________________________________________________

activation_299 (Activation) (None, 60, 60, 192) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

max_pooling2d_19 (MaxPooling2D) (None, 29, 29, 192) 0 activation_299[0][0]

__________________________________________________________________________________________________

conv2d_61 (Conv2D) (None, 29, 29, 64) 12288 max_pooling2d_19[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 29, 29, 64) 192 conv2d_61[0][0]

__________________________________________________________________________________________________

activation_303 (Activation) (None, 29, 29, 64) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

conv2d_59 (Conv2D) (None, 29, 29, 48) 9216 max_pooling2d_19[0][0]

__________________________________________________________________________________________________

conv2d_62 (Conv2D) (None, 29, 29, 96) 55296 activation_303[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 29, 29, 48) 144 conv2d_59[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 29, 29, 96) 288 conv2d_62[0][0]

__________________________________________________________________________________________________

activation_301 (Activation) (None, 29, 29, 48) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

activation_304 (Activation) (None, 29, 29, 96) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

average_pooling2d_1 (AveragePoo (None, 29, 29, 192) 0 max_pooling2d_19[0][0]

__________________________________________________________________________________________________

conv2d_58 (Conv2D) (None, 29, 29, 64) 12288 max_pooling2d_19[0][0]

__________________________________________________________________________________________________

conv2d_60 (Conv2D) (None, 29, 29, 64) 76800 activation_301[0][0]

__________________________________________________________________________________________________

conv2d_63 (Conv2D) (None, 29, 29, 96) 82944 activation_304[0][0]

__________________________________________________________________________________________________

conv2d_64 (Conv2D) (None, 29, 29, 32) 6144 average_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 29, 29, 64) 192 conv2d_58[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 29, 29, 64) 192 conv2d_60[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 29, 29, 96) 288 conv2d_63[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 29, 29, 32) 96 conv2d_64[0][0]

__________________________________________________________________________________________________

activation_300 (Activation) (None, 29, 29, 64) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

activation_302 (Activation) (None, 29, 29, 64) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

activation_305 (Activation) (None, 29, 29, 96) 0 batch_normalization_11[0][0]

__________________________________________________________________________________________________

activation_306 (Activation) (None, 29, 29, 32) 0 batch_normalization_12[0][0]

__________________________________________________________________________________________________

mixed0 (Concatenate) (None, 29, 29, 256) 0 activation_300[0][0], activation_302[0][0], activation_305[0][0], activation_306[0][0]

__________________________________________________________________________________________________

conv2d_68 (Conv2D) (None, 29, 29, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 29, 29, 64) 192 conv2d_68[0][0]

__________________________________________________________________________________________________

activation_310 (Activation) (None, 29, 29, 64) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

conv2d_66 (Conv2D) (None, 29, 29, 48) 12288 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_69 (Conv2D) (None, 29, 29, 96) 55296 activation_310[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 29, 29, 48) 144 conv2d_66[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 29, 29, 96) 288 conv2d_69[0][0]

__________________________________________________________________________________________________

activation_308 (Activation) (None, 29, 29, 48) 0 batch_normalization_14[0][0]

__________________________________________________________________________________________________

activation_311 (Activation) (None, 29, 29, 96) 0 batch_normalization_17[0][0]

__________________________________________________________________________________________________

average_pooling2d_2 (AveragePoo (None, 29, 29, 256) 0 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_65 (Conv2D) (None, 29, 29, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_67 (Conv2D) (None, 29, 29, 64) 76800 activation_308[0][0]

__________________________________________________________________________________________________

conv2d_70 (Conv2D) (None, 29, 29, 96) 82944 activation_311[0][0]

__________________________________________________________________________________________________

conv2d_71 (Conv2D) (None, 29, 29, 64) 16384 average_pooling2d_2[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 29, 29, 64) 192 conv2d_65[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 29, 29, 64) 192 conv2d_67[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 29, 29, 96) 288 conv2d_70[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 29, 29, 64) 192 conv2d_71[0][0]

__________________________________________________________________________________________________

activation_307 (Activation) (None, 29, 29, 64) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

activation_309 (Activation) (None, 29, 29, 64) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

activation_312 (Activation) (None, 29, 29, 96) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

activation_313 (Activation) (None, 29, 29, 64) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

mixed1 (Concatenate) (None, 29, 29, 288) 0 activation_307[0][0], activation_309[0][0], activation_312[0][0], activation_313[0][0]

__________________________________________________________________________________________________

conv2d_75 (Conv2D) (None, 29, 29, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 29, 29, 64) 192 conv2d_75[0][0]

__________________________________________________________________________________________________

activation_317 (Activation) (None, 29, 29, 64) 0 batch_normalization_23[0][0]

__________________________________________________________________________________________________

conv2d_73 (Conv2D) (None, 29, 29, 48) 13824 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_76 (Conv2D) (None, 29, 29, 96) 55296 activation_317[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 29, 29, 48) 144 conv2d_73[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 29, 29, 96) 288 conv2d_76[0][0]

__________________________________________________________________________________________________

activation_315 (Activation) (None, 29, 29, 48) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

activation_318 (Activation) (None, 29, 29, 96) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

average_pooling2d_3 (AveragePoo (None, 29, 29, 288) 0 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_72 (Conv2D) (None, 29, 29, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_74 (Conv2D) (None, 29, 29, 64) 76800 activation_315[0][0]

__________________________________________________________________________________________________

conv2d_77 (Conv2D) (None, 29, 29, 96) 82944 activation_318[0][0]

__________________________________________________________________________________________________

conv2d_78 (Conv2D) (None, 29, 29, 64) 18432 average_pooling2d_3[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 29, 29, 64) 192 conv2d_72[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 29, 29, 64) 192 conv2d_74[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 29, 29, 96) 288 conv2d_77[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 29, 29, 64) 192 conv2d_78[0][0]

__________________________________________________________________________________________________

activation_314 (Activation) (None, 29, 29, 64) 0 batch_normalization_20[0][0]

__________________________________________________________________________________________________

activation_316 (Activation) (None, 29, 29, 64) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

activation_319 (Activation) (None, 29, 29, 96) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

activation_320 (Activation) (None, 29, 29, 64) 0 batch_normalization_26[0][0]

__________________________________________________________________________________________________

mixed2 (Concatenate) (None, 29, 29, 288) 0 activation_314[0][0], activation_316[0][0], activation_319[0][0], activation_320[0][0]

__________________________________________________________________________________________________

conv2d_80 (Conv2D) (None, 29, 29, 64) 18432 mixed2[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 29, 29, 64) 192 conv2d_80[0][0]

__________________________________________________________________________________________________

activation_322 (Activation) (None, 29, 29, 64) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_81 (Conv2D) (None, 29, 29, 96) 55296 activation_322[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 29, 29, 96) 288 conv2d_81[0][0]

__________________________________________________________________________________________________

activation_323 (Activation) (None, 29, 29, 96) 0 batch_normalization_29[0][0]

__________________________________________________________________________________________________

conv2d_79 (Conv2D) (None, 14, 14, 384) 995328 mixed2[0][0]